OpenGL Real-Time Renderer (Part 1): Building the Architecture

- April 5, 2023

- by pladmin

The project is open source:

GitHub – Puluomiyuhun/PL_RealtimeRenderer: A renderer made by OpenGL.

Introduction

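I have previously written a software rasterizer and a software ray tracer in C++, plus a hardware ray tracer with OptiX, so it is time to build a hardware rasterizer too. Of the four renderers, the hardware rasterizer is probably the most pleasant to develop: the graphics API already wraps the various features and modules well, so unlike the C++ renderers not everything has to be written by hand, and unlike the OptiX ray tracer there is no need to work through a pile of math in pursuit of physical correctness. In this series I use OpenGL to build a hardware rasterizer. Since I can't yet write OpenGL from memory, I mainly follow the ideas and code of learnOpengl (LearnOpenGL CN (learnopengl-cn.github.io)) to build a realtime-tiny-renderer with a solid set of basic features.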

First, following learnOpengl's setup instructions, build and compile the GLFW library, generate the GLAD loader for OpenGL 3.3, and link both into the OpenGL project.

Part1. Window

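Every renderer starts with building the pipeline, which is the most complex and most tedious step. Before that, we set up the window. Create a main.cpp with the following code: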

```cpp
#include <glad/glad.h>
#include <GLFW/glfw3.h>
#include <iostream>

/* Resize the viewport when the window's framebuffer size changes */
void framebuffer_size_callback(GLFWwindow* window, int width, int height)
{
    glViewport(0, 0, width, height);
}

/* Keyboard input handler */
void processInput(GLFWwindow* window)
{
    if (glfwGetKey(window, GLFW_KEY_ESCAPE) == GLFW_PRESS)
        glfwSetWindowShouldClose(window, true);
}

int main()
{
    if (!glfwInit())
        return -1;
    glfwWindowHint(GLFW_CONTEXT_VERSION_MAJOR, 3); // major version: 3
    glfwWindowHint(GLFW_CONTEXT_VERSION_MINOR, 3); // minor version: 3
    glfwWindowHint(GLFW_OPENGL_PROFILE, GLFW_OPENGL_CORE_PROFILE); // core profile
    GLFWwindow* window = glfwCreateWindow(800, 600, "LearnOpenGL", NULL, NULL); // create the window
    if (window == NULL)
    {
        std::cout << "Failed to create GLFW window" << std::endl;
        glfwTerminate();
        return -1;
    }
    glfwMakeContextCurrent(window); // make the window's context current on this thread
    glfwSetFramebufferSizeCallback(window, framebuffer_size_callback); // called when the window is resized
    if (!gladLoadGLLoader((GLADloadproc)glfwGetProcAddress)) // initialize GLAD (needs a current context)
    {
        std::cout << "Failed to initialize GLAD" << std::endl;
        glfwTerminate();
        return -1;
    }
    while (!glfwWindowShouldClose(window)) // render loop
    {
        processInput(window); // custom keyboard handler
        glClearColor(0.2f, 0.3f, 0.3f, 1.0f);
        glClear(GL_COLOR_BUFFER_BIT);
        glfwSwapBuffers(window); // double buffering: swap front and back buffers
        glfwPollEvents(); // process pending window and input events
    }
    glfwTerminate(); // destroy remaining windows and free GLFW resources
    return 0;
}
```

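A very basic window setup. The flow is: initialize GLFW, create the window, make its context current, register the resize callback, initialize GLAD (which requires a current context), run the render loop, and shut down. There is not much else to explain.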
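The glViewport call in framebuffer_size_callback defines how normalized device coordinates (NDC, each axis in [-1, 1]) map to framebuffer pixels. Per the OpenGL specification, x_w = (x_nd + 1)·(width/2) + x and y_w = (y_nd + 1)·(height/2) + y. The sketch below reproduces that mapping on the CPU; the Viewport/WindowPos types and ndcToWindow are hypothetical names used only for illustration. It shows why the viewport must be updated on resize: otherwise NDC keeps mapping onto the old pixel rectangle.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical helper types, for illustration only.
struct Viewport { int x, y, width, height; };  // what glViewport(x, y, width, height) sets
struct WindowPos { float x, y; };

// Map a point in normalized device coordinates ([-1, 1] on each axis)
// to window (framebuffer) coordinates, as the viewport transform does.
WindowPos ndcToWindow(float xNdc, float yNdc, const Viewport& vp) {
    WindowPos p;
    p.x = (xNdc + 1.0f) * (vp.width / 2.0f) + vp.x;
    p.y = (yNdc + 1.0f) * (vp.height / 2.0f) + vp.y;
    return p;
}
```

With an 800×600 viewport, NDC (0, 0) lands on pixel (400, 300) and NDC (1, 1) on the top-right corner (800, 600). Note that OpenGL window coordinates start at the bottom-left corner, with y growing upward.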

Part2. Pipeline

Next we define shaders and set up the pipeline to draw triangles, using the familiar trio of VAO, VBO and EBO.

```cpp
#include <glad/glad.h>
#include <GLFW/glfw3.h>
#include <iostream>

/* Each vertex: position (3 floats), color (3 floats) */
float vertices1[] = {
    -0.5f, -0.5f, 0.0f, 1.0f, 0.0f, 0.0f,
     0.5f, -0.5f, 0.0f, 0.0f, 1.0f, 0.0f,
     0.0f,  0.5f, 0.0f, 0.0f, 0.0f, 1.0f,
    -0.5f,  0.5f, 0.0f, 0.0f, 1.0f, 0.0f,
};
float vertices2[] = {
     0.6f, -0.5f, 0.0f, 1.0f, 0.0f, 0.0f,
     1.0f, -0.5f, 0.0f, 0.0f, 1.0f, 0.0f,
     0.8f,  0.5f, 0.0f, 0.0f, 0.0f, 1.0f,
     1.0f,  0.5f, 0.0f, 1.0f, 0.0f, 0.0f,
};
unsigned int indices1[] = {
    0, 1, 2,
    0, 2, 3,
};
unsigned int indices2[] = {
    0, 1, 2,
    1, 2, 3,
};

const char* vertexShaderSource = "#version 330 core\n"
    "layout (location = 0) in vec3 aPos;\n"
    "layout (location = 1) in vec3 aCol;\n"
    "out vec4 color;\n"
    "void main()\n"
    "{\n"
    "   gl_Position = vec4(aPos.x, aPos.y, aPos.z, 1.0);\n"
    "   color = vec4(aCol, 1.0);\n"
    "}\0";
const char* fragmentShaderSource = "#version 330 core\n"
    "in vec4 color;\n"
    "out vec4 FragColor;\n"
    "void main()\n"
    "{\n"
    "   FragColor = color;\n"
    "}\0";

unsigned int VAO[2];
unsigned int VBO[2];
unsigned int EBO[2];
unsigned int shaderProgram;

/* Print the info log if a shader failed to compile */
void checkCompile(unsigned int shader) {
    int success;
    glGetShaderiv(shader, GL_COMPILE_STATUS, &success);
    if (!success) {
        char infoLog[512];
        glGetShaderInfoLog(shader, 512, NULL, infoLog);
        std::cout << "Shader compilation failed:\n" << infoLog << std::endl;
    }
}

void bingdingShader() {
    /* Create the vertex shader */
    unsigned int vertexShader = glCreateShader(GL_VERTEX_SHADER);
    glShaderSource(vertexShader, 1, &vertexShaderSource, NULL);
    glCompileShader(vertexShader);
    checkCompile(vertexShader);
    /* Create the fragment shader */
    unsigned int fragmentShader = glCreateShader(GL_FRAGMENT_SHADER);
    glShaderSource(fragmentShader, 1, &fragmentShaderSource, NULL);
    glCompileShader(fragmentShader);
    checkCompile(fragmentShader);
    /* Link both shaders into a program (the pipeline) */
    shaderProgram = glCreateProgram();
    glAttachShader(shaderProgram, vertexShader);
    glAttachShader(shaderProgram, fragmentShader);
    glLinkProgram(shaderProgram);
    glDeleteShader(vertexShader); // no longer needed once linked
    glDeleteShader(fragmentShader);
    /* Set up VAOs, VBOs and EBOs */
    glGenVertexArrays(2, VAO); // generate 2 vertex array objects, ids stored in VAO
    glGenBuffers(2, VBO);      // generate 2 buffer objects for vertex data, ids stored in VBO
    glGenBuffers(2, EBO);      // generate 2 buffer objects for index data, ids stored in EBO
    glBindVertexArray(VAO[0]); // bind VAO 0: the attribute setup below is recorded into it
    glBindBuffer(GL_ARRAY_BUFFER, VBO[0]); // bind the buffer to the GL_ARRAY_BUFFER target
    glBufferData(GL_ARRAY_BUFFER, sizeof(vertices1), vertices1, GL_STATIC_DRAW); // upload the vertex data
    glBindBuffer(GL_ELEMENT_ARRAY_BUFFER, EBO[0]); // this binding is stored in the bound VAO
    glBufferData(GL_ELEMENT_ARRAY_BUFFER, sizeof(indices1), indices1, GL_STATIC_DRAW);
    glVertexAttribPointer(0, 3, GL_FLOAT, GL_FALSE, 6 * sizeof(float), (void*)0); // attribute 0 (position): 3 floats, stride 6 floats, offset 0
    glVertexAttribPointer(1, 3, GL_FLOAT, GL_FALSE, 6 * sizeof(float), (void*)(3 * sizeof(float))); // attribute 1 (color): 3 floats, offset 3 floats
    glEnableVertexAttribArray(0); // enable attribute 0
    glEnableVertexAttribArray(1);
    glBindVertexArray(VAO[1]); // same setup for the second object
    glBindBuffer(GL_ARRAY_BUFFER, VBO[1]);
    glBufferData(GL_ARRAY_BUFFER, sizeof(vertices2), vertices2, GL_STATIC_DRAW);
    glBindBuffer(GL_ELEMENT_ARRAY_BUFFER, EBO[1]);
    glBufferData(GL_ELEMENT_ARRAY_BUFFER, sizeof(indices2), indices2, GL_STATIC_DRAW);
    glVertexAttribPointer(0, 3, GL_FLOAT, GL_FALSE, 6 * sizeof(float), (void*)0);
    glVertexAttribPointer(1, 3, GL_FLOAT, GL_FALSE, 6 * sizeof(float), (void*)(3 * sizeof(float)));
    glEnableVertexAttribArray(0);
    glEnableVertexAttribArray(1);
}

/* Resize the viewport when the window's framebuffer size changes */
void framebuffer_size_callback(GLFWwindow* window, int width, int height)
{
    glViewport(0, 0, width, height);
}

/* Keyboard input handler */
void processInput(GLFWwindow* window)
{
    if (glfwGetKey(window, GLFW_KEY_ESCAPE) == GLFW_PRESS)
        glfwSetWindowShouldClose(window, true);
}

int main()
{
    if (!glfwInit())
        return -1;
    glfwWindowHint(GLFW_CONTEXT_VERSION_MAJOR, 3); // major version: 3
    glfwWindowHint(GLFW_CONTEXT_VERSION_MINOR, 3); // minor version: 3
    glfwWindowHint(GLFW_OPENGL_PROFILE, GLFW_OPENGL_CORE_PROFILE); // core profile
    GLFWwindow* window = glfwCreateWindow(800, 600, "LearnOpenGL", NULL, NULL); // create the window
    if (window == NULL)
    {
        std::cout << "Failed to create GLFW window" << std::endl;
        glfwTerminate();
        return -1;
    }
    glfwMakeContextCurrent(window); // make the window's context current on this thread
    glfwSetFramebufferSizeCallback(window, framebuffer_size_callback); // called when the window is resized
    if (!gladLoadGLLoader((GLADloadproc)glfwGetProcAddress)) // initialize GLAD
    {
        std::cout << "Failed to initialize GLAD" << std::endl;
        glfwTerminate();
        return -1;
    }
    bingdingShader();
    while (!glfwWindowShouldClose(window)) // render loop
    {
        processInput(window); // custom keyboard handler
        glClearColor(0.2f, 0.3f, 0.3f, 1.0f);
        glClear(GL_COLOR_BUFFER_BIT);
        glUseProgram(shaderProgram);
        glBindVertexArray(VAO[0]); // brings VBO[0], EBO[0] and the attribute layout with it
        glDrawElements(GL_TRIANGLES, 6, GL_UNSIGNED_INT, 0);
        glBindVertexArray(VAO[1]);
        glDrawElements(GL_TRIANGLES, 6, GL_UNSIGNED_INT, 0);
        glfwSwapBuffers(window); // double buffering: swap front and back buffers
        glfwPollEvents(); // process pending window and input events
    }
    glDeleteVertexArrays(2, VAO);
    glDeleteBuffers(2, VBO);
    glDeleteBuffers(2, EBO);
    glDeleteProgram(shaderProgram);
    glfwTerminate(); // destroy remaining windows and free GLFW resources
    return 0;
}
```

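The key lines are commented. In short, we create two quadrilaterals (each made of two triangles) and store their vertex data in vertices1 and vertices2. Before getting to the shaders, let me first explain VAO, VBO and EBO.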

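A VBO is a buffer of vertex data. It can store all attributes of every vertex in order (position, normal, vertex color, UV, and so on), and we simply upload the vertex data from the vertices array into the buffer bound as the VBO. However, the VBO does not know how many attributes each vertex has or how many bytes each attribute occupies, so when the data is fed to the shader, the shader would not know how to split it into attributes either. So what do we do?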

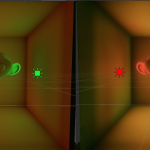

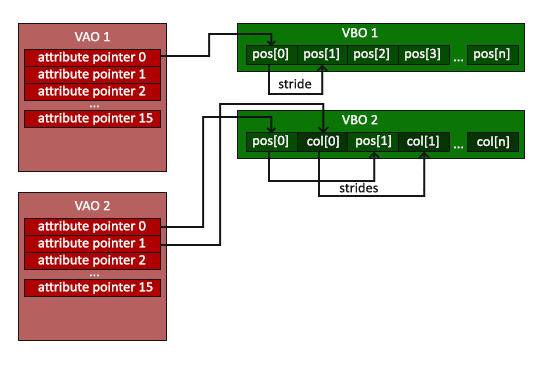

这里就需要VAO出马了。VAO一共存了16个顶点属性指针,每个指针对应1个顶点属性。我们调用glVertexAttribPointer就可以告诉VAO中K号指针 所对应的K号属性 处于每个顶点信息中的偏移位置,以及该属性占几个字节,这样就成功地把VBO中每个顶点的一坨数据 分解成各个有意义的属性了。如下图:

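As the figure shows, a VBO simply lays out all the data of all vertices one after another, and each VAO pointer refers to one attribute of each vertex. In the figure, VBO1 contains only one attribute, position, so VAO1 only needs attribute pointer 0, defined via glVertexAttribPointer as 3 floats at offset 0. VBO2 contains two attributes, position and vertex color, so VAO2 needs attribute pointers 0 and 1, each defining the offset and size of its attribute.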

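In short, the VBO is a pile of vertex attributes, and the VAO defines several pointers into the VBO's vertex data, one per attribute. To fetch attribute M of vertex N, OpenGL applies the rule we defined for attribute M (byte address = offset_M + N × stride) and reads that vertex's attribute from there. So the VBO is the source data, and the VAO describes how to split that source data into individual attributes.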
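The address rule can be made concrete with a CPU-side sketch. AttribPointer is a hypothetical stand-in for the state one glVertexAttribPointer call records (component count, stride in bytes, offset in bytes), and fetchAttrib reads attribute values for vertex n exactly as described above, assuming tightly stored GL_FLOAT data and no normalization.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <vector>

// Hypothetical mirror of glVertexAttribPointer(index, size, GL_FLOAT, GL_FALSE, stride, offset).
struct AttribPointer {
    int size;            // number of float components (1..4)
    std::size_t stride;  // bytes from one vertex to the next
    std::size_t offset;  // byte offset of this attribute inside a vertex
};

// Read attribute `attr` of vertex `n` from a raw, interleaved vertex buffer.
std::vector<float> fetchAttrib(const std::vector<float>& vbo, const AttribPointer& attr, std::size_t n) {
    const unsigned char* bytes = reinterpret_cast<const unsigned char*>(vbo.data());
    std::size_t start = attr.offset + n * attr.stride;  // address = offset + n * stride
    std::size_t count = static_cast<std::size_t>(attr.size);
    assert(start + count * sizeof(float) <= vbo.size() * sizeof(float));  // stay inside the buffer
    std::vector<float> out(count);
    std::memcpy(out.data(), bytes + start, count * sizeof(float));
    return out;
}
```

With the 6-float layout of vertices1, position uses offset 0 and color uses offset 3 * sizeof(float), both with stride 6 * sizeof(float), which are the same arguments passed to glVertexAttribPointer in bingdingShader.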

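The last piece is the EBO, the triangle index buffer, which records which VBO vertices make up each triangle; I have used it countless times, so I won't go into detail. (One more point: while VAO[0] is bound with glBindVertexArray(VAO[0]), the buffer bound to GL_ELEMENT_ARRAY_BUFFER is stored in the VAO, and each glVertexAttribPointer call records the buffer currently bound to GL_ARRAY_BUFFER. So EBO[0] and VBO[0] become tied to VAO[0], and in the render loop binding VAO[0] alone tells OpenGL to use VBO[0] and EBO[0] as its data.)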
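What glDrawElements(GL_TRIANGLES, ...) does with the EBO can be sketched on the CPU: every three indices select three vertices from the vertex array. The function name expandIndexed is hypothetical. Applied to the positions of vertices1 and to indices1, it rebuilds the 6-vertex triangle list a non-indexed draw would need, while the indexed version stores only 4 vertices.

```cpp
#include <array>
#include <cassert>
#include <vector>

using Vec3 = std::array<float, 3>;

// Expand an indexed mesh into a plain triangle list (3 positions per triangle),
// mimicking how glDrawElements(GL_TRIANGLES, ...) walks the index buffer.
std::vector<Vec3> expandIndexed(const std::vector<Vec3>& positions,
                                const std::vector<unsigned int>& indices) {
    assert(indices.size() % 3 == 0);     // GL_TRIANGLES consumes indices three at a time
    std::vector<Vec3> tris;
    tris.reserve(indices.size());
    for (unsigned int idx : indices) {
        assert(idx < positions.size());  // an out-of-range index is a bug in the EBO
        tris.push_back(positions[idx]);
    }
    return tris;
}
```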

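As for shaders, I covered the definitions of vertex and fragment shaders in detail in my first C++ software rasterizer, so I won't repeat them. Here the vertex shader takes two inputs: attribute 0 is the vertex position, named aPos, which the vertex shader uses to place the vertex; attribute 1 is the vertex color, aCol, which it passes on to the fragment shader. The fragment shader receives the (interpolated) color and writes it out directly.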
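Between the two shaders, the rasterizer interpolates the vertex shader's outputs (here `color`) across each triangle, which is why the triangles show smooth gradients between their red, green and blue corners. A minimal sketch using barycentric weights, assuming plain linear interpolation in screen space; OpenGL interpolates perspective-correctly by default, which gives the same result here because every vertex has w = 1.0. The function names are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec2 = std::array<float, 2>;
using Vec3 = std::array<float, 3>;

// Twice the signed area of triangle (a, b, c); the sign depends on the winding.
float edge(const Vec2& a, const Vec2& b, const Vec2& c) {
    return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0]);
}

// Barycentric weights of point p with respect to triangle (a, b, c).
Vec3 barycentric(const Vec2& a, const Vec2& b, const Vec2& c, const Vec2& p) {
    float area = edge(a, b, c);
    return { edge(b, c, p) / area, edge(c, a, p) / area, edge(a, b, p) / area };
}

// Interpolate a per-vertex color at point p, as the rasterizer does for `out` variables.
Vec3 interpolateColor(const Vec2 pos[3], const Vec3 col[3], const Vec2& p) {
    Vec3 w = barycentric(pos[0], pos[1], pos[2], p);
    Vec3 out{};
    for (int k = 0; k < 3; ++k)
        out[k] = w[0] * col[0][k] + w[1] * col[1][k] + w[2] * col[2][k];
    return out;
}
```

At a corner the fragment gets exactly that vertex's color; at the triangle's centroid it gets one third of each.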

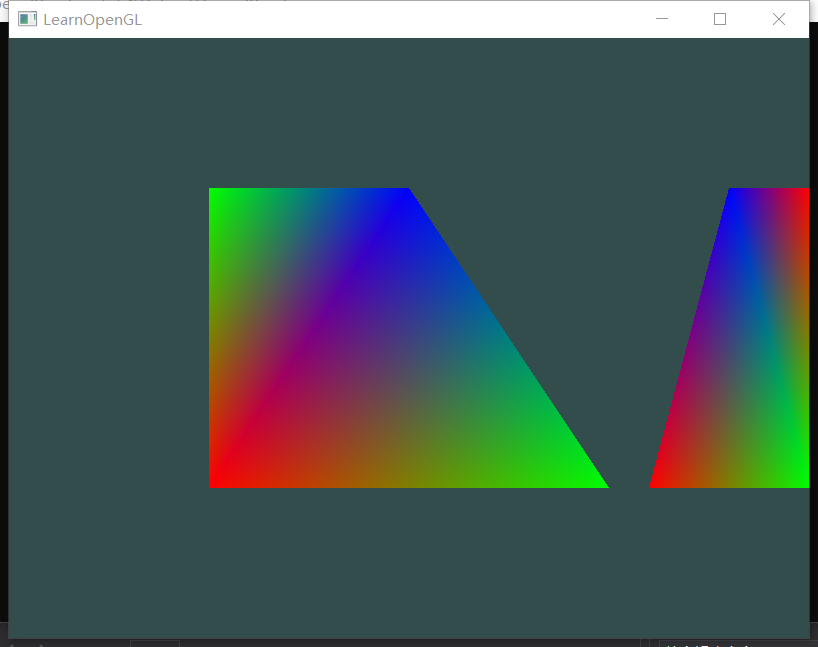

最后得到如下效果:

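Next, I moved the shader code into .vsh and .fsh files and wrote a myShader class that opens the shader files, compiles them and links the program, which makes writing shaders more convenient.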
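The myShader class itself is not shown here. As a rough sketch of its first step, assuming the usual LearnOpenGL approach of reading the whole file into a std::string, loading a shader file needs only the standard library. readShaderSource is a hypothetical name, not the project's API.

```cpp
#include <cassert>
#include <fstream>
#include <sstream>
#include <stdexcept>
#include <string>

// Read an entire shader source file (e.g. "shader.vsh") into a string.
// Throws if the file cannot be opened, so a bad path fails loudly
// instead of silently compiling an empty shader.
std::string readShaderSource(const std::string& path) {
    std::ifstream file(path);
    if (!file)
        throw std::runtime_error("cannot open shader file: " + path);
    std::stringstream buffer;
    buffer << file.rdbuf();  // copy the whole file
    return buffer.str();
}
```

The result would then be handed to OpenGL via `const char* code = src.c_str(); glShaderSource(vertexShader, 1, &code, NULL);`, keeping `src` alive until glShaderSource returns.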

Part3. Texture

We create myTexture.h to handle loading and binding textures:

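```cpp
#pragma once
#include <glad/glad.h>
// STB_IMAGE_IMPLEMENTATION compiles stb_image's code here, so this header
// must be included by only one .cpp file to avoid duplicate symbols.
#define STB_IMAGE_IMPLEMENTATION
#include "stb_image.h"

/* Load an image file into a new 2D texture; returns the texture id, or 0 on failure */
inline unsigned int loadTexture(const char* texturePath) {
    unsigned int texture;
    glGenTextures(1, &texture);
    glBindTexture(GL_TEXTURE_2D, texture);
    // Set wrapping and filtering on the currently bound texture
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_REPEAT);
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_REPEAT);
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR_MIPMAP_LINEAR); // actually use the mipmaps
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);
    // Load the image and upload it to the texture
    int width, height, nrChannels;
    unsigned char* data = stbi_load(texturePath, &width, &height, &nrChannels, 0);
    if (!data) {
        glDeleteTextures(1, &texture); // don't leak the texture object on failure
        return 0;
    }
    // Choose the pixel format from the channel count instead of assuming RGB
    GLenum format = GL_RGB;
    if (nrChannels == 1) format = GL_RED;
    else if (nrChannels == 4) format = GL_RGBA;
    glPixelStorei(GL_UNPACK_ALIGNMENT, 1); // stb_image rows are tightly packed
    glTexImage2D(GL_TEXTURE_2D, 0, format, width, height, 0, format, GL_UNSIGNED_BYTE, data);
    glGenerateMipmap(GL_TEXTURE_2D);
    stbi_image_free(data);
    return texture;
}
```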

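The function creates and binds a texture object, sets its wrapping and filtering modes, reads the image pixels with the stb_image library, uploads them into the texture and generates mipmaps. Finally it returns the texture's id.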
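One pitfall with glTexImage2D: by default OpenGL assumes every row of the source pixels starts on a 4-byte boundary (GL_UNPACK_ALIGNMENT = 4), while stb_image returns tightly packed rows. For 3-channel images whose width × 3 is not a multiple of 4, OpenGL then reads each row from the wrong place and the texture comes out skewed. The arithmetic as a small sketch (expectedRowBytes is a hypothetical name):

```cpp
#include <cassert>
#include <cstddef>

// Bytes OpenGL expects per source row: width * channels rounded up to a
// multiple of `alignment` (the GL_UNPACK_ALIGNMENT value: 1, 2, 4 or 8).
std::size_t expectedRowBytes(std::size_t width, std::size_t channels, std::size_t alignment) {
    std::size_t tight = width * channels;                    // what stb_image actually provides
    return (tight + alignment - 1) / alignment * alignment;  // round up to the alignment
}
```

For a 3-pixel-wide RGB image, OpenGL expects 12 bytes per row but stb_image supplies 9. Calling glPixelStorei(GL_UNPACK_ALIGNMENT, 1) before glTexImage2D makes the two agree.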

Displaying a texture requires sampling it, and sampling requires every vertex of the model to have UV coordinates:

```cpp
/* Each vertex: position (3 floats), color (3 floats), uv (2 floats) */
float vertices1[] = {
    -0.5f, -0.5f, 0.0f, 1.0f, 0.0f, 0.0f, 0.0f, 0.0f,
     0.5f, -0.5f, 0.0f, 0.0f, 1.0f, 0.0f, 1.0f, 0.0f,
     0.0f,  0.5f, 0.0f, 0.0f, 0.0f, 1.0f, 0.5f, 1.0f,
    -0.5f,  0.5f, 0.0f, 0.0f, 1.0f, 0.0f, 0.0f, 1.0f,
};
float vertices2[] = {
     0.6f, -0.5f, 0.0f, 1.0f, 0.0f, 0.0f, 0.0f, 0.0f,
     1.0f, -0.5f, 0.0f, 0.0f, 1.0f, 0.0f, 1.0f, 0.0f,
     0.8f,  0.5f, 0.0f, 0.0f, 0.0f, 1.0f, 0.5f, 1.0f,
     1.0f,  0.5f, 0.0f, 1.0f, 0.0f, 0.0f, 1.0f, 1.0f,
};
```

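Next we bind the UVs to the VAO as attribute 2. Each vertex now holds 3 floats of position, 3 floats of color and 2 floats of UV, so the fifth parameter (the stride between vertices) becomes 8 * sizeof(float), and the sixth parameter (the offset) of attribute 2 becomes 6 * sizeof(float):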

```cpp
glVertexAttribPointer(0, 3, GL_FLOAT, GL_FALSE, 8 * sizeof(float), (void*)0); // attribute 0 (position): 3 floats, offset 0
glVertexAttribPointer(1, 3, GL_FLOAT, GL_FALSE, 8 * sizeof(float), (void*)(3 * sizeof(float))); // attribute 1 (color): 3 floats, offset 3 floats
glVertexAttribPointer(2, 2, GL_FLOAT, GL_FALSE, 8 * sizeof(float), (void*)(6 * sizeof(float))); // attribute 2 (uv): 2 floats, offset 6 floats
glEnableVertexAttribArray(0); // enable attributes 0, 1 and 2
glEnableVertexAttribArray(1);
glEnableVertexAttribArray(2);
```
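Hard-coded strides and offsets are easy to get wrong whenever an attribute is added. A sketch of deriving them from the component counts instead, assuming every attribute is a tightly packed run of floats; AttribLayout and computeLayout are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct AttribLayout {
    int components;      // e.g. 3 for position
    std::size_t offset;  // byte offset inside one vertex
};

// Given the float component count of each attribute in order,
// compute per-attribute byte offsets and the total stride.
std::vector<AttribLayout> computeLayout(const std::vector<int>& components, std::size_t& stride) {
    std::vector<AttribLayout> layout;
    std::size_t offset = 0;
    for (int c : components) {
        layout.push_back({c, offset});
        offset += static_cast<std::size_t>(c) * sizeof(float);
    }
    stride = offset;  // one vertex = all attributes back to back
    return layout;
}
```

For the position/color/uv layout, computeLayout({3, 3, 2}, stride) gives offsets 0, 12 and 24 bytes and a 32-byte stride, matching the calls above. A loop over the result could then call glVertexAttribPointer(i, l[i].components, GL_FLOAT, GL_FALSE, stride, (void*)l[i].offset) and glEnableVertexAttribArray(i).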

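Before the render loop we load one texture for each of the two objects, and inside the loop we bind the matching texture before each draw: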

```cpp
unsigned int TEX[2]; // one texture id per object
TEX[0] = loadTexture("Albedo1.jpg");
TEX[1] = loadTexture("Albedo2.jpg");
while (!glfwWindowShouldClose(window)) // render loop
{
    processInput(window); // custom keyboard handler
    glClearColor(0.2f, 0.3f, 0.3f, 1.0f);
    glClear(GL_COLOR_BUFFER_BIT);
    glUseProgram(shaderProgram);
    glBindTexture(GL_TEXTURE_2D, TEX[0]); // binds to the active unit, GL_TEXTURE0 by default
    glBindVertexArray(VAO[0]);
    glDrawElements(GL_TRIANGLES, 6, GL_UNSIGNED_INT, 0);
    glBindTexture(GL_TEXTURE_2D, TEX[1]);
    glBindVertexArray(VAO[1]);
    glDrawElements(GL_TRIANGLES, 6, GL_UNSIGNED_INT, 0);
    glfwSwapBuffers(window); // double buffering: swap front and back buffers
    glfwPollEvents(); // process pending window and input events
}
```

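The shader side now has both the texture to sample and the UVs of the vertices (and, after interpolation, of the fragments). The shaders can be written like this: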

```glsl
/////////////////////// vertex shader ///////////////////////
#version 330 core
layout (location = 0) in vec3 aPos;
layout (location = 1) in vec3 aCol;
layout (location = 2) in vec2 aUV;
out vec4 color;
out vec2 texcoord;
void main()
{
    gl_Position = vec4(aPos, 1.0);
    color = vec4(aCol, 1.0);
    texcoord = aUV;
}

/////////////////////// fragment shader ///////////////////////
#version 330 core
in vec4 color;
in vec2 texcoord;
out vec4 FragColor;
uniform sampler2D ourTexture;
void main()
{
    FragColor = texture(ourTexture, texcoord);
}
```

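The vertex shader simply passes the UV on. In the fragment shader, ourTexture is the sampler for the bound texture, and the built-in texture function samples it at the interpolated UV to obtain the texture color. ourTexture never has to be set from C++ because a sampler uniform defaults to 0, i.e. texture unit GL_TEXTURE0, which is the unit glBindTexture uses unless glActiveTexture selects another. You could also multiply the result by the vertex color to blend the base color with the texture (but the blend looks ugly, so I didn't).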
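What texture(ourTexture, texcoord) computes with GL_REPEAT wrapping and GL_LINEAR filtering (as set in loadTexture) can be sketched on the CPU for a single-channel image. This is a simplified model, not OpenGL's implementation: it ignores mipmaps, places texel centers at (i + 0.5) / width, and treats row 0 as v = 0. The function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Wrap an integer texel index into [0, size), like GL_REPEAT.
int wrapRepeat(int i, int size) {
    int r = i % size;
    return r < 0 ? r + size : r;
}

// Bilinearly sample a single-channel width x height image at (u, v) with repeat wrapping.
float sampleBilinearRepeat(const std::vector<float>& img, int width, int height, float u, float v) {
    // Texel centers sit at (i + 0.5) / width, so shift by half a texel.
    float x = u * width - 0.5f;
    float y = v * height - 0.5f;
    int x0 = static_cast<int>(std::floor(x));
    int y0 = static_cast<int>(std::floor(y));
    float fx = x - x0, fy = y - y0;  // fractional blend weights
    auto texel = [&](int i, int j) {
        return img[wrapRepeat(j, height) * width + wrapRepeat(i, width)];
    };
    float row0 = texel(x0, y0) * (1 - fx) + texel(x0 + 1, y0) * fx;
    float row1 = texel(x0, y0 + 1) * (1 - fx) + texel(x0 + 1, y0 + 1) * fx;
    return row0 * (1 - fy) + row1 * fy;
}
```

On a 2×1 image {0, 1}, sampling at u = 0.25 hits the first texel center (0), u = 0.5 lies halfway between the texels (0.5), and u = 0.0 also gives 0.5 because REPEAT blends the first column with the last one. u = 1.25 wraps around to the same value as u = 0.25.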

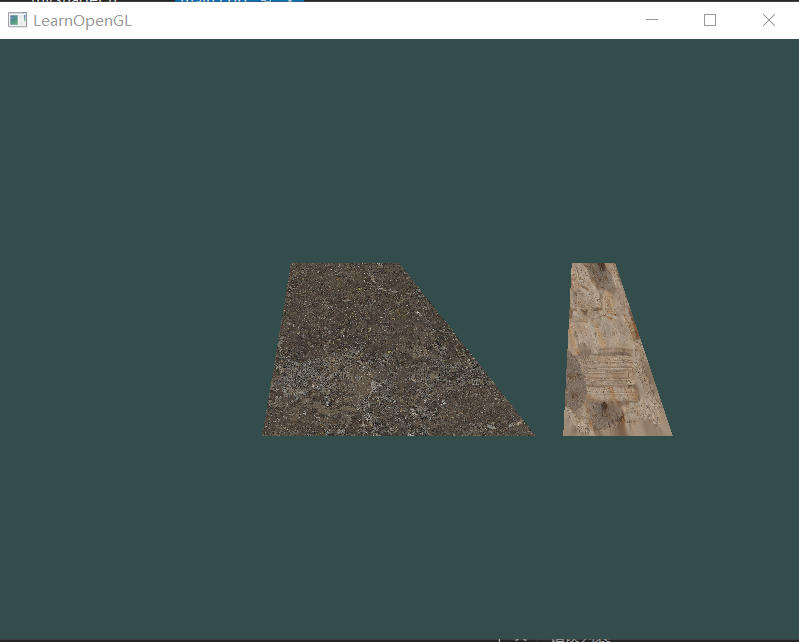

效果如下:

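Part4. Coordinate Transformations

I'm not going to hand-roll a math library for this renderer; I use the glm math library directly. This section implements coordinate transformations, which involve computing matrices and passing them to the shader, so we first add a method to myShader.h that sets a uniform matrix: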

```cpp
void setMatrix(const std::string& name, glm::mat4 mat) const {
    glUniformMatrix4fv(glGetUniformLocation(ID, name.c_str()), 1, GL_FALSE, glm::value_ptr(mat));
}
```

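This method uploads a matrix from the client (C++) side into a uniform variable of the shader. Note that glUniform* calls act on the program currently in use, so glUseProgram must be called before setMatrix.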

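Now for the coordinate transformations themselves. I described the transformation pipeline at the start of my earlier post C++光栅化软渲染器(下)渲染篇 – PULUO (C++ software rasterizer, part 2: rendering). In essence, each model-space vertex is multiplied in turn by the model-to-world matrix, the world-to-camera (view) matrix and the projection (clip) matrix, so our task is to work out these three matrices.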

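First, the model-to-world matrix is the simplest. Given the object's position, rotation and scale in world space, model-to-world matrix = translation matrix × rotation matrix × scale matrix. (Note that these matrices act on a column vector from right to left: the vertex is scaled first, then rotated, then translated.)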
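To see why the order matters, here is a small self-contained sketch with a hand-rolled 4×4 matrix using the column-vector convention (in the renderer glm does this; the Mat4 type and helper functions below are hypothetical). T·R·S applied to a point scales it first, then rotates, then translates; S·R·T gives a different result.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Row-major storage, column-vector convention: p' = M * p.
using Mat4 = std::array<float, 16>;
using Vec3 = std::array<float, 3>;

Mat4 identity() {
    Mat4 m{};                            // all zeros
    m[0] = m[5] = m[10] = m[15] = 1.0f;
    return m;
}

Mat4 mul(const Mat4& a, const Mat4& b) {
    Mat4 r{};
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j)
            for (int k = 0; k < 4; ++k)
                r[i * 4 + j] += a[i * 4 + k] * b[k * 4 + j];
    return r;
}

Mat4 translate(float x, float y, float z) {
    Mat4 m = identity();
    m[3] = x; m[7] = y; m[11] = z;
    return m;
}

Mat4 scale(float s) {
    Mat4 m = identity();
    m[0] = m[5] = m[10] = s;
    return m;
}

// Counter-clockwise rotation about the z axis by `rad` radians.
Mat4 rotateZ(float rad) {
    Mat4 m = identity();
    m[0] = std::cos(rad); m[1] = -std::sin(rad);
    m[4] = std::sin(rad); m[5] = std::cos(rad);
    return m;
}

// Transform a point (w = 1).
Vec3 apply(const Mat4& m, const Vec3& p) {
    Vec3 r{};
    for (int i = 0; i < 3; ++i)
        r[i] = m[i * 4] * p[0] + m[i * 4 + 1] * p[1] + m[i * 4 + 2] * p[2] + m[i * 4 + 3];
    return r;
}
```

With S = scale by 2, R = 90° about z and T = move by (10, 0, 0), the point (1, 0, 0) ends up at (10, 2, 0) under T·R·S but at (0, 22, 0) under S·R·T. glm uses the same column-vector convention, so the rightmost matrix in a product is the one that acts on the vertex first.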

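Next is the world-to-camera matrix, which is the inverse of the camera's own model-to-world transform. Given the camera's position, rotation and scale in world space, world-to-camera matrix = inverse scale × inverse rotation × inverse translation.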
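A quick numeric check of this claim, with hand-rolled matrices (hypothetical helpers, not glm): build the camera's own transform C = T(p)·R_y(θ), form the view matrix V = R_y(-θ)·T(-p), and verify that V·C is the identity, i.e. V undoes the camera's transform. For a camera at (0, 0, 3) with no rotation, V reduces to a translation by (0, 0, -3).

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Row-major storage, column-vector convention: p' = M * p.
using Mat4 = std::array<float, 16>;
using Vec3 = std::array<float, 3>;

Mat4 identity() { Mat4 m{}; m[0] = m[5] = m[10] = m[15] = 1.0f; return m; }

Mat4 mul(const Mat4& a, const Mat4& b) {
    Mat4 r{};
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j)
            for (int k = 0; k < 4; ++k)
                r[i * 4 + j] += a[i * 4 + k] * b[k * 4 + j];
    return r;
}

Mat4 translate(float x, float y, float z) {
    Mat4 m = identity();
    m[3] = x; m[7] = y; m[11] = z;
    return m;
}

// Rotation about the y axis by `rad` radians.
Mat4 rotateY(float rad) {
    Mat4 m = identity();
    m[0] = std::cos(rad);  m[2] = std::sin(rad);
    m[8] = -std::sin(rad); m[10] = std::cos(rad);
    return m;
}

Vec3 apply(const Mat4& m, const Vec3& p) {
    Vec3 r{};
    for (int i = 0; i < 3; ++i)
        r[i] = m[i * 4] * p[0] + m[i * 4 + 1] * p[1] + m[i * 4 + 2] * p[2] + m[i * 4 + 3];
    return r;
}

// Camera-to-world: rotate the camera, then move it to position p.
Mat4 cameraToWorld(const Vec3& p, float yaw) { return mul(translate(p[0], p[1], p[2]), rotateY(yaw)); }

// World-to-camera (view): undo the translation first, then undo the rotation.
Mat4 worldToCamera(const Vec3& p, float yaw) { return mul(rotateY(-yaw), translate(-p[0], -p[1], -p[2])); }
```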

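Finally, the projection (clip) matrix: we choose the vertical field of view fovy, the viewport aspect ratio, and the near and far clipping plane distances, then call glm::perspective to get the corresponding perspective matrix.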
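For reference, a sketch of the matrix glm::perspective builds under GLM's defaults (right-handed, clip-space depth in [-1, 1], fovy in radians). This is a reimplementation for illustration, not glm's source; the names are hypothetical. The check confirms that points on the near and far planes land at NDC depth -1 and +1 after the perspective divide, and that a point on the top edge of the field of view lands at NDC y = 1.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Mat4 = std::array<float, 16>;   // row-major, column-vector convention
using Vec3 = std::array<float, 3>;

// Right-handed perspective matrix mapping view-space z in [-zNear, -zFar] to NDC z in [-1, 1].
Mat4 perspective(float fovy, float aspect, float zNear, float zFar) {
    float f = 1.0f / std::tan(fovy / 2.0f);
    Mat4 m{};
    m[0] = f / aspect;
    m[5] = f;
    m[10] = -(zFar + zNear) / (zFar - zNear);
    m[11] = -(2.0f * zFar * zNear) / (zFar - zNear);
    m[14] = -1.0f;   // w_clip = -z_view
    return m;
}

// Multiply a view-space point by m and apply the perspective divide to get NDC.
Vec3 toNdc(const Mat4& m, const Vec3& p) {
    float clip[4];
    for (int i = 0; i < 4; ++i)
        clip[i] = m[i * 4] * p[0] + m[i * 4 + 1] * p[1] + m[i * 4 + 2] * p[2] + m[i * 4 + 3];
    return {clip[0] / clip[3], clip[1] / clip[3], clip[2] / clip[3]};
}
```

One practical note: the aspect ratio must be computed in floating point. With int width and height, 800 / 600 evaluates to 1 and the image is stretched horizontally.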

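Here we want the model tilted by 45° in the world, and we place the abstract camera at (0,0,3). The camera looks toward -z, so to see a model at z = 0 the camera's z must be greater than 0. Following the definitions above, the model-to-world matrix is a 45° rotation (the code rotates by -45° about the x axis); the world-to-camera matrix is a translation by (0,0,-3) (-3 rather than 3, because the world-to-camera matrix is the inverse of the camera's own transform); and the projection is a perspective matrix with a 45° field of view, the window's aspect ratio, a near plane at 0.1f and a far plane at 100.0f. Finally, all three matrices are passed to the shader: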

```cpp
while (!glfwWindowShouldClose(window)) // render loop
{
    processInput(window); // custom keyboard handler
    glClearColor(0.2f, 0.3f, 0.3f, 1.0f);
    glClear(GL_COLOR_BUFFER_BIT);
    glUseProgram(shaderProgram); // the program must be in use before its uniforms are set
    glm::mat4 model = glm::identity<glm::mat4>();
    model = glm::rotate(model, glm::radians(-45.0f), glm::vec3(1.0f, 0.0f, 0.0f)); // tilt around the x axis
    glm::mat4 view = glm::identity<glm::mat4>();
    view = glm::translate(view, glm::vec3(0.0f, 0.0f, -3.0f)); // inverse of the camera at (0, 0, 3)
    // Cast to float: with int sizes, 800 / 600 would be integer division (= 1)
    glm::mat4 projection = glm::perspective(glm::radians(45.0f), (float)screenWidth / (float)screenHeight, 0.1f, 100.0f);
    shader.setMatrix("model", model);
    shader.setMatrix("view", view);
    shader.setMatrix("projection", projection);
    ...
}
```

The vertex shader now receives the three matrices and multiplies the original vertex position by them in turn:

```glsl
#version 330 core
layout (location = 0) in vec3 aPos;
layout (location = 1) in vec3 aCol;
layout (location = 2) in vec2 aUV;
out vec4 color;
out vec2 texcoord;
uniform mat4 model;
uniform mat4 view;
uniform mat4 projection;
void main()
{
    gl_Position = projection * view * model * vec4(aPos, 1.0);
    color = vec4(aCol, 1.0);
    texcoord = aUV;
}
```

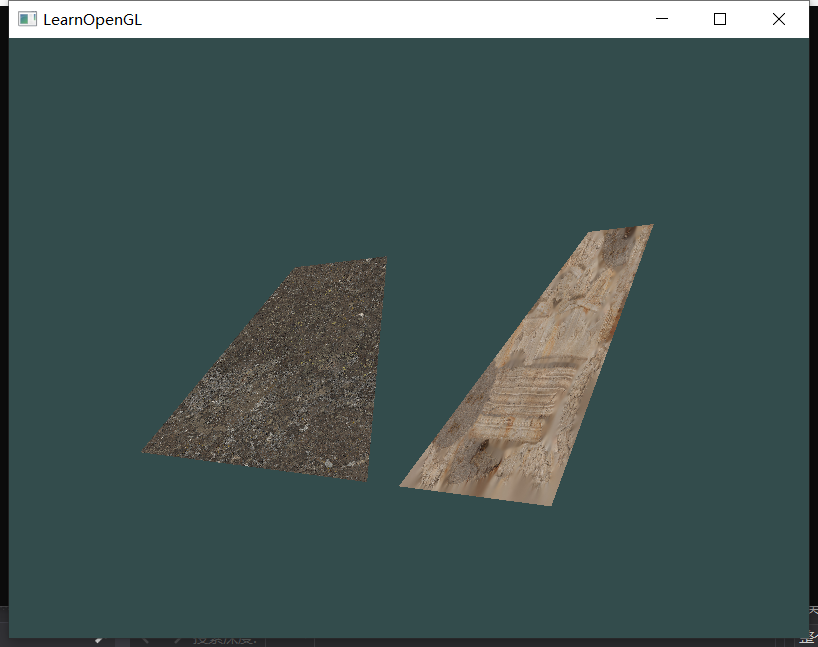

最后可以看到如下结果:

已经拥有透视的立体感了。

Part5. Free Camera

In the previous section we defined an abstract camera and put it at (0,0,3). In this section we build a proper, complete camera class.

```cpp
#pragma once
#include <glad/glad.h>
#include <glm/glm.hpp>
#include <glm/gtc/matrix_transform.hpp>
#include <glm/gtc/type_ptr.hpp>

struct Euler {
    double pitch; // degrees
    double yaw;   // degrees
    double roll;  // degrees (unused so far)
};

class myCamera {
public:
    myCamera(glm::vec3 Pos, glm::vec3 Front, glm::vec3 Up, Euler eu, double fov_) {
        cameraPos = Pos;
        cameraFront = Front;
        cameraUp = Up;
        cameraEuler = eu;
        fov = static_cast<float>(fov_);
    }
    /* View (world-to-camera) matrix */
    glm::mat4 getView() {
        return glm::lookAt(cameraPos, cameraPos + cameraFront, cameraUp);
    }
    glm::vec3 cameraPos;    // position in world space
    glm::vec3 cameraFront;  // viewing direction
    glm::vec3 cameraUp;     // up direction
    Euler cameraEuler;      // drives cameraFront
    float fov = 45.0f;      // vertical field of view, in degrees
};
```

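The camera mainly needs: a position, a front vector, an up vector, Euler angles and fovy. The first three essentially determine what the camera sees; the Euler angles determine the front vector cameraFront; and fovy sets the field of view of the perspective matrix. getView calls glm::lookAt to build the view matrix needed for the transformation.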
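For intuition, a sketch of what a right-handed lookAt computes, written with plain arrays. It follows the standard construction that glm::lookAt uses in its default right-handed mode, but the code and names are illustrative: build an orthonormal basis (right, up, -forward) from the front and up vectors, then rotate and translate the world into that basis.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<float, 3>;
using Mat4 = std::array<float, 16>;   // row-major, column-vector convention

Vec3 sub(const Vec3& a, const Vec3& b) { return {a[0] - b[0], a[1] - b[1], a[2] - b[2]}; }
float dot(const Vec3& a, const Vec3& b) { return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]; }
Vec3 cross(const Vec3& a, const Vec3& b) {
    return {a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0]};
}
Vec3 normalize(const Vec3& v) {
    float len = std::sqrt(dot(v, v));
    return {v[0] / len, v[1] / len, v[2] / len};
}

// View matrix for a camera at `eye` looking at `center` (right-handed: camera looks down -z).
Mat4 lookAt(const Vec3& eye, const Vec3& center, const Vec3& up) {
    Vec3 f = normalize(sub(center, eye));  // forward
    Vec3 s = normalize(cross(f, up));      // right
    Vec3 u = cross(s, f);                  // corrected up
    return {  s[0],  s[1],  s[2], -dot(s, eye),
              u[0],  u[1],  u[2], -dot(u, eye),
             -f[0], -f[1], -f[2],  dot(f, eye),
              0.0f,  0.0f,  0.0f,  1.0f };
}
```

For the initial camera (position (0,0,3), front (0,0,-1), so center = (0,0,2)), this produces exactly the translate(0, 0, -3) view matrix of the previous section.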

In main.cpp, define a global pointer myCamera* camera, then create a camera instance in main:

```cpp
camera = new myCamera(glm::vec3(0.0f, 0.0f, 3.0f), glm::vec3(0.0f, 0.0f, -1.0f), glm::vec3(0.0f, 1.0f, 0.0f), Euler{ 0.0f, -90.0f, 0.0f }, 45.0f);
while (!glfwWindowShouldClose(window)) // render loop
{
    processInput(window); // custom keyboard handler
    glClearColor(0.2f, 0.3f, 0.3f, 1.0f);
    glClear(GL_COLOR_BUFFER_BIT);
    glUseProgram(shaderProgram);
    glm::mat4 model = glm::identity<glm::mat4>();
    model = glm::rotate(model, glm::radians(-45.0f), glm::vec3(1.0f, 0.0f, 0.0f));
    glm::mat4 view = camera->getView(); // view matrix from the camera
    // fov is stored in degrees, but glm::perspective expects radians
    glm::mat4 projection = glm::perspective(glm::radians(camera->fov), (float)screenWidth / (float)screenHeight, 0.1f, 100.0f);
    ...
```

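The scene now has a camera instance: the view matrix comes directly from getView, and the perspective matrix's fovy comes from the camera's fov. Since glm::perspective takes the angle in radians while fov is stored in degrees, it has to go through glm::radians first.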

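We also register callbacks for mouse movement and the scroll wheel, which control the camera's rotation and fovy, and extend processInput so the keyboard moves the camera: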

```cpp
// ... register the callbacks in main, after the window is created:
glfwSetCursorPosCallback(window, mouse_callback);
glfwSetInputMode(window, GLFW_CURSOR, GLFW_CURSOR_DISABLED); // hide the cursor and capture it in the window
glfwSetScrollCallback(window, scroll_callback);
// ...

/* Mouse state shared by the callbacks (assumed start: center of the 800x600 window) */
float lastX = 400.0f, lastY = 300.0f;
bool firstMouse = true;

/* Keyboard input handler */
void processInput(GLFWwindow* window)
{
    if (glfwGetKey(window, GLFW_KEY_ESCAPE) == GLFW_PRESS)
        glfwSetWindowShouldClose(window, true);
    float cameraSpeed = 0.001f; // distance per frame, so speed depends on the frame rate; adjust accordingly
    if (glfwGetKey(window, GLFW_KEY_W) == GLFW_PRESS) // forward
        camera->cameraPos += cameraSpeed * camera->cameraFront;
    if (glfwGetKey(window, GLFW_KEY_S) == GLFW_PRESS) // backward
        camera->cameraPos -= cameraSpeed * camera->cameraFront;
    if (glfwGetKey(window, GLFW_KEY_A) == GLFW_PRESS) // strafe left
        camera->cameraPos -= glm::normalize(glm::cross(camera->cameraFront, camera->cameraUp)) * cameraSpeed;
    if (glfwGetKey(window, GLFW_KEY_D) == GLFW_PRESS) // strafe right
        camera->cameraPos += glm::normalize(glm::cross(camera->cameraFront, camera->cameraUp)) * cameraSpeed;
}

/* Mouse movement callback: turn the camera */
void mouse_callback(GLFWwindow* window, double xpos, double ypos)
{
    if (firstMouse) // first event: only record the position, to avoid a jump
    {
        lastX = static_cast<float>(xpos);
        lastY = static_cast<float>(ypos);
        firstMouse = false;
        return;
    }
    float xoffset = static_cast<float>(xpos) - lastX;
    float yoffset = lastY - static_cast<float>(ypos); // reversed: window y grows downward
    lastX = static_cast<float>(xpos);
    lastY = static_cast<float>(ypos);
    float sensitivity = 0.02f;
    xoffset *= sensitivity;
    yoffset *= sensitivity;
    camera->cameraEuler.yaw += xoffset;
    camera->cameraEuler.pitch += yoffset;
    // Clamp pitch so cameraFront never becomes parallel to cameraUp
    if (camera->cameraEuler.pitch > 89.0f)
        camera->cameraEuler.pitch = 89.0f;
    if (camera->cameraEuler.pitch < -89.0f)
        camera->cameraEuler.pitch = -89.0f;
    glm::vec3 front;
    front.x = cos(glm::radians(camera->cameraEuler.yaw)) * cos(glm::radians(camera->cameraEuler.pitch));
    front.y = sin(glm::radians(camera->cameraEuler.pitch));
    front.z = sin(glm::radians(camera->cameraEuler.yaw)) * cos(glm::radians(camera->cameraEuler.pitch));
    camera->cameraFront = glm::normalize(front);
}

/* Scroll callback: zoom by changing fov, clamped to [1, 45] degrees */
void scroll_callback(GLFWwindow* window, double xoffset, double yoffset)
{
    float sensitivity = 0.1f;
    if (camera->fov >= 1.0f && camera->fov <= 45.0f)
        camera->fov -= yoffset * sensitivity;
    if (camera->fov <= 1.0f)
        camera->fov = 1.0f;
    if (camera->fov >= 45.0f)
        camera->fov = 45.0f;
}
```

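Pressing W/A/S/D moves the camera; moving the mouse changes the camera's Euler angles and hence the direction of cameraFront; scrolling the wheel changes fovy. With this we can roam freely around the scene.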
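The yaw/pitch-to-front formula used in mouse_callback can be checked in isolation. A sketch with plain arrays (frontFromEuler is a hypothetical name). It also shows why the camera is created with yaw = -90°: with yaw = -90° and pitch = 0°, the formula gives (0, 0, -1), the same front vector passed to the constructor, so the first mouse movement does not make the view jump.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3d = std::array<double, 3>;

double radians(double deg) { return deg * 3.14159265358979323846 / 180.0; }

// Front vector from yaw (around +y, measured from +x) and pitch (elevation), both in degrees.
// Same formula as mouse_callback; cos^2(pitch) + sin^2(pitch) = 1 makes it unit length already.
Vec3d frontFromEuler(double yawDeg, double pitchDeg) {
    double yaw = radians(yawDeg), pitch = radians(pitchDeg);
    return { std::cos(yaw) * std::cos(pitch),
             std::sin(pitch),
             std::sin(yaw) * std::cos(pitch) };
}
```

The ±89° pitch clamp matters because at ±90° the front vector becomes parallel to cameraUp, and the cross product inside lookAt collapses to zero.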

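That more or less wraps up the introductory part. Since I already had a background in software rasterization and ray tracing, this part was easy to follow: from starting learnOpengl to finishing it took roughly one afternoon. Still, I want to tidy up the code a little, for example by encapsulating the mesh data so the architecture is clearer.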

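Part6. Encapsulating Mesh Data

First we create a Mesh.h file with a myMesh class to hold all this messy vertex data, and we also add a function that generates a cube (we are in a 3D world now, and drawing two flat quads looks a bit silly).

Spaghetti-code warning for Mesh.h ahead: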

```cpp
#pragma once
#include <glad/glad.h>
#include <glm/glm.hpp>
#include <glm/gtc/matrix_transform.hpp>
#include <glm/gtc/type_ptr.hpp>
#include <vector>

/* One vertex with its attributes kept separate, for readability */
struct vertice {
    glm::vec3 pos;
    glm::vec2 uv;
    glm::vec3 normal;
};

class myMesh {
public:
    myMesh() {
    }
    myMesh(glm::vec3 pos_offset, glm::vec3 size) {
        Cube(pos_offset, size);
    }
    /* Build a cube: 6 faces x 2 triangles x 3 vertices = 36 vertices */
    void Cube(glm::vec3 pos_offset, glm::vec3 size) {
        size_of_each_vertice = 8; // floats per vertex: pos 3 + uv 2 + normal 3
        cnt_vertices = 36;
        cnt_indices = 36;
        vertice_struct.clear();
        // back face (-z)
        vertice_struct.push_back(vertice{ glm::vec3(-0.5f, -0.5f, -0.5f),glm::vec2(0.0f, 0.0f),glm::vec3(0.0f,0.0f,-1.0f) });
        vertice_struct.push_back(vertice{ glm::vec3(0.5f, -0.5f, -0.5f),glm::vec2(1.0f, 0.0f),glm::vec3(0.0f,0.0f,-1.0f) });
        vertice_struct.push_back(vertice{ glm::vec3(0.5f, 0.5f, -0.5f),glm::vec2(1.0f, 1.0f),glm::vec3(0.0f,0.0f,-1.0f) });
        vertice_struct.push_back(vertice{ glm::vec3(0.5f, 0.5f, -0.5f),glm::vec2(1.0f, 1.0f),glm::vec3(0.0f,0.0f,-1.0f) });
        vertice_struct.push_back(vertice{ glm::vec3(-0.5f, 0.5f, -0.5f),glm::vec2(0.0f, 1.0f),glm::vec3(0.0f,0.0f,-1.0f) });
        vertice_struct.push_back(vertice{ glm::vec3(-0.5f, -0.5f, -0.5f),glm::vec2(0.0f, 0.0f),glm::vec3(0.0f,0.0f,-1.0f) });
        // front face (+z)
        vertice_struct.push_back(vertice{ glm::vec3(-0.5f, -0.5f, 0.5f),glm::vec2(0.0f, 0.0f),glm::vec3(0.0f,0.0f,1.0f) });
        vertice_struct.push_back(vertice{ glm::vec3(0.5f, -0.5f, 0.5f),glm::vec2(1.0f, 0.0f),glm::vec3(0.0f,0.0f,1.0f) });
        vertice_struct.push_back(vertice{ glm::vec3(0.5f, 0.5f, 0.5f),glm::vec2(1.0f, 1.0f),glm::vec3(0.0f,0.0f,1.0f) });
        vertice_struct.push_back(vertice{ glm::vec3(0.5f, 0.5f, 0.5f),glm::vec2(1.0f, 1.0f),glm::vec3(0.0f,0.0f,1.0f) });
        vertice_struct.push_back(vertice{ glm::vec3(-0.5f, 0.5f, 0.5f),glm::vec2(0.0f, 1.0f),glm::vec3(0.0f,0.0f,1.0f) });
        vertice_struct.push_back(vertice{ glm::vec3(-0.5f, -0.5f, 0.5f),glm::vec2(0.0f, 0.0f),glm::vec3(0.0f,0.0f,1.0f) });
        // left face (-x)
        vertice_struct.push_back(vertice{ glm::vec3(-0.5f, 0.5f, 0.5f),glm::vec2(1.0f, 0.0f),glm::vec3(-1.0f,0.0f,0.0f) });
        vertice_struct.push_back(vertice{ glm::vec3(-0.5f, 0.5f, -0.5f),glm::vec2(1.0f, 1.0f),glm::vec3(-1.0f,0.0f,0.0f) });
        vertice_struct.push_back(vertice{ glm::vec3(-0.5f, -0.5f, -0.5f),glm::vec2(0.0f, 1.0f),glm::vec3(-1.0f,0.0f,0.0f) });
        vertice_struct.push_back(vertice{ glm::vec3(-0.5f, -0.5f, -0.5f),glm::vec2(0.0f, 1.0f),glm::vec3(-1.0f,0.0f,0.0f) });
        vertice_struct.push_back(vertice{ glm::vec3(-0.5f, -0.5f, 0.5f),glm::vec2(0.0f, 0.0f),glm::vec3(-1.0f,0.0f,0.0f) });
        vertice_struct.push_back(vertice{ glm::vec3(-0.5f, 0.5f, 0.5f),glm::vec2(1.0f, 0.0f),glm::vec3(-1.0f,0.0f,0.0f) });
        // right face (+x)
        vertice_struct.push_back(vertice{ glm::vec3(0.5f, 0.5f, 0.5f),glm::vec2(1.0f, 0.0f),glm::vec3(1.0f,0.0f,0.0f) });
        vertice_struct.push_back(vertice{ glm::vec3(0.5f, 0.5f, -0.5f),glm::vec2(1.0f, 1.0f),glm::vec3(1.0f,0.0f,0.0f) });
        vertice_struct.push_back(vertice{ glm::vec3(0.5f, -0.5f, -0.5f),glm::vec2(0.0f, 1.0f),glm::vec3(1.0f,0.0f,0.0f) });
        vertice_struct.push_back(vertice{ glm::vec3(0.5f, -0.5f, -0.5f),glm::vec2(0.0f, 1.0f),glm::vec3(1.0f,0.0f,0.0f) });
        vertice_struct.push_back(vertice{ glm::vec3(0.5f, -0.5f, 0.5f),glm::vec2(0.0f, 0.0f),glm::vec3(1.0f,0.0f,0.0f) });
        vertice_struct.push_back(vertice{ glm::vec3(0.5f, 0.5f, 0.5f),glm::vec2(1.0f, 0.0f),glm::vec3(1.0f,0.0f,0.0f) });
        // bottom face (-y)
        vertice_struct.push_back(vertice{ glm::vec3(-0.5f, -0.5f, -0.5f),glm::vec2(0.0f, 1.0f),glm::vec3(0.0f,-1.0f,0.0f) });
        vertice_struct.push_back(vertice{ glm::vec3(0.5f, -0.5f, -0.5f),glm::vec2(1.0f, 1.0f),glm::vec3(0.0f,-1.0f,0.0f) });
        vertice_struct.push_back(vertice{ glm::vec3(0.5f, -0.5f, 0.5f),glm::vec2(1.0f, 0.0f),glm::vec3(0.0f,-1.0f,0.0f) });
        vertice_struct.push_back(vertice{ glm::vec3(0.5f, -0.5f, 0.5f),glm::vec2(1.0f, 0.0f),glm::vec3(0.0f,-1.0f,0.0f) });
        vertice_struct.push_back(vertice{ glm::vec3(-0.5f, -0.5f, 0.5f),glm::vec2(0.0f, 0.0f),glm::vec3(0.0f,-1.0f,0.0f) });
        vertice_struct.push_back(vertice{ glm::vec3(-0.5f, -0.5f, -0.5f),glm::vec2(0.0f, 1.0f),glm::vec3(0.0f,-1.0f,0.0f) });
        // top face (+y)
        vertice_struct.push_back(vertice{ glm::vec3(-0.5f, 0.5f, -0.5f),glm::vec2(0.0f, 1.0f),glm::vec3(0.0f,1.0f,0.0f) });
        vertice_struct.push_back(vertice{ glm::vec3(0.5f, 0.5f, -0.5f),glm::vec2(1.0f, 1.0f),glm::vec3(0.0f,1.0f,0.0f) });
        vertice_struct.push_back(vertice{ glm::vec3(0.5f, 0.5f, 0.5f),glm::vec2(1.0f, 0.0f),glm::vec3(0.0f,1.0f,0.0f) });
        vertice_struct.push_back(vertice{ glm::vec3(0.5f, 0.5f, 0.5f),glm::vec2(1.0f, 0.0f),glm::vec3(0.0f,1.0f,0.0f) });
        vertice_struct.push_back(vertice{ glm::vec3(-0.5f, 0.5f, 0.5f),glm::vec2(0.0f, 0.0f),glm::vec3(0.0f,1.0f,0.0f) });
        vertice_struct.push_back(vertice{ glm::vec3(-0.5f, 0.5f, -0.5f),glm::vec2(0.0f, 1.0f),glm::vec3(0.0f,1.0f,0.0f) });
        // Scale the unit cube, then move it into place
        for (int i = 0; i < cnt_vertices; i++) {
            vertice_struct[i].pos = vertice_struct[i].pos * size + pos_offset;
        }
        // Flatten into interleaved floats: pos, uv, normal
        vertices.assign(cnt_vertices * size_of_each_vertice, 0.0f);
        for (int i = 0; i < cnt_vertices; i++) {
            for (int j = 0; j < 3; j++)
                vertices[i * size_of_each_vertice + j] = vertice_struct[i].pos[j];
            for (int j = 0; j < 2; j++)
                vertices[i * size_of_each_vertice + j + 3] = vertice_struct[i].uv[j];
            for (int j = 0; j < 3; j++)
                vertices[i * size_of_each_vertice + j + 5] = vertice_struct[i].normal[j];
        }
        // No vertices are shared, so the indices are simply 0, 1, ..., 35
        indices.resize(cnt_indices);
        for (int i = 0; i < cnt_indices; i++)
            indices[i] = i;
    }
    float* getVertices() { return vertices.data(); }
    unsigned int* getIndices() { return indices.data(); }
    int getSize() { return size_of_each_vertice; }
    int getCntVertices() { return cnt_vertices; }
    int getCntIndices() { return cnt_indices; }
private:
    // std::vector owns and frees the memory
    std::vector<float> vertices;       // interleaved vertex data, ready for glBufferData
    std::vector<unsigned int> indices; // index data, ready for glBufferData
    int size_of_each_vertice = 0;
    int cnt_vertices = 0;
    int cnt_indices = 0;
    std::vector<vertice> vertice_struct;
};
```

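vertices and indices hold the data as flat float and unsigned int arrays that OpenGL can upload directly. For readability, I also defined a vertice struct that keeps each vertex's attributes separate, which makes it easier to inspect a vertex's position, UV and normal.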

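I then wrote a function that builds a cube: it presets the position, UV and normal of the cube's 36 vertices, then flattens them into vertices and indices so OpenGL can bind them directly. size_of_each_vertice is the number of values per vertex (pos + uv + normal = 8 values; note: values, not bytes); cnt_vertices is the number of vertices in the mesh, and cnt_indices is the total length of the index array.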

main.cpp needs matching changes:

```cpp
void bindData() {
    myMesh* mesh1 = new myMesh(glm::vec3(-1, 0, 0), glm::vec3(1, 1, 1));
    myMesh* mesh2 = new myMesh(glm::vec3(1, 0, 0), glm::vec3(1, 1, 1));
    /* Set up VAOs, VBOs and EBOs */
    glGenVertexArrays(2, VAO); // generate 2 vertex array objects, ids stored in VAO
    glGenBuffers(2, VBO);      // generate 2 buffer objects for vertex data, ids stored in VBO
    glGenBuffers(2, EBO);      // generate 2 buffer objects for index data, ids stored in EBO
    glBindVertexArray(VAO[0]); // bind VAO 0: the attribute setup below is recorded into it
    glBindBuffer(GL_ARRAY_BUFFER, VBO[0]); // bind the buffer to the GL_ARRAY_BUFFER target
    // bytes = vertex count * values per vertex * sizeof(float)
    glBufferData(GL_ARRAY_BUFFER, mesh1->getCntVertices() * mesh1->getSize() * sizeof(float), mesh1->getVertices(), GL_STATIC_DRAW);
    glBindBuffer(GL_ELEMENT_ARRAY_BUFFER, EBO[0]);
    glBufferData(GL_ELEMENT_ARRAY_BUFFER, mesh1->getCntIndices() * sizeof(unsigned int), mesh1->getIndices(), GL_STATIC_DRAW);
    // New layout: 0 = position (3), 1 = uv (2), 2 = normal (3); the vertex shader's locations must match
    glVertexAttribPointer(0, 3, GL_FLOAT, GL_FALSE, mesh1->getSize() * sizeof(float), (void*)0); // position, offset 0
    glVertexAttribPointer(1, 2, GL_FLOAT, GL_FALSE, mesh1->getSize() * sizeof(float), (void*)(3 * sizeof(float))); // uv, offset 3 floats
    glVertexAttribPointer(2, 3, GL_FLOAT, GL_FALSE, mesh1->getSize() * sizeof(float), (void*)(5 * sizeof(float))); // normal, offset 5 floats
    glEnableVertexAttribArray(0); // enable attributes 0, 1 and 2
    glEnableVertexAttribArray(1);
    glEnableVertexAttribArray(2);
    ...
```

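Note that when filling the VBO, the requested size in bytes must be total vertex count × values per vertex × bytes per value. If this size is too small, parts of the cube will be missing.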
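For the cube, the numbers work out as below, written as a compile-time sketch (the constant names are hypothetical, and the checks assume 4-byte float and unsigned int, as on common platforms). A typical way to get the size wrong is to pass sizeof(mesh1->getVertices()): that is the size of a pointer (8 bytes on a typical 64-bit build), i.e. only two floats, so nearly the whole cube would be missing.

```cpp
#include <cassert>
#include <cstddef>

constexpr std::size_t cntVertices = 36;     // mesh->getCntVertices()
constexpr std::size_t valuesPerVertex = 8;  // mesh->getSize(): pos 3 + uv 2 + normal 3
constexpr std::size_t cntIndices = 36;      // mesh->getCntIndices()

// Size arguments for the two glBufferData calls.
constexpr std::size_t vboBytes = cntVertices * valuesPerVertex * sizeof(float);
constexpr std::size_t eboBytes = cntIndices * sizeof(unsigned int);

static_assert(vboBytes == 1152, "36 vertices * 8 floats * 4 bytes (assumes 4-byte float)");
static_assert(eboBytes == 144, "36 indices * 4 bytes (assumes 4-byte unsigned int)");
```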

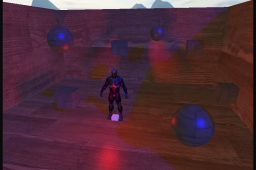

Finally, we enable the depth test so that fragments hidden behind nearer fragments are discarded.

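```cpp
glEnable(GL_DEPTH_TEST); // enable once, after the context has been created
...
while (!glfwWindowShouldClose(window)) // render loop
{
    processInput(window); // custom keyboard handler
    glClearColor(0.2f, 0.3f, 0.3f, 1.0f);
    glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT); // clear the depth buffer every frame too
    ...
```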
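What the depth test does per pixel can be sketched on the CPU: the depth buffer stores the nearest depth seen so far, glClear with GL_DEPTH_BUFFER_BIT resets it to 1.0 (the default clear depth), and with the default GL_LESS comparison a fragment survives only if its depth is smaller. DepthBuffer is a hypothetical model, not an OpenGL API. Without clearing each frame, the previous frame's depths would reject the new frame's fragments, which is why GL_DEPTH_BUFFER_BIT is added to glClear.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical CPU model of a depth buffer using the default GL_LESS test.
struct DepthBuffer {
    int width, height;
    std::vector<float> depth;
    std::vector<int> color;  // stand-in for the color buffer: id of the winning fragment

    DepthBuffer(int w, int h) : width(w), height(h), depth(w * h, 1.0f), color(w * h, 0) {}

    // Equivalent of glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT).
    void clear() {
        std::fill(depth.begin(), depth.end(), 1.0f);
        std::fill(color.begin(), color.end(), 0);
    }

    // Try to write a fragment; returns true if it passes the depth test.
    bool writeFragment(int x, int y, float z, int colorId) {
        int i = y * width + x;
        if (!(z < depth[i])) return false;  // GL_LESS: keep only strictly nearer fragments
        depth[i] = z;
        color[i] = colorId;
        return true;
    }
};
```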

The final result: